Audio latency refers to the delay between the time a sound is produced and when it is heard. It is a measure of the time it takes for an audio signal to travel through a system, including audio processing, conversion, and playback. Latency can occur in various stages of audio production and playback, and it is particularly relevant in digital audio systems.

There are several factors that can contribute to audio latency:

1. Analog-to-Digital Conversion (ADC) Latency:

- When converting analog audio signals into digital format, there is a slight delay introduced by the ADC process. This latency is usually very low and may not be noticeable in most cases.

2. Digital Signal Processing (DSP) Latency:

- When audio signals are processed digitally, such as applying effects or equalization, there is a processing time required for the computations to be performed. This introduces additional latency depending on the complexity of the processing and the capabilities of the hardware or software being used.

3. Buffering Latency:

- To ensure smooth playback and prevent audio dropouts or glitches, audio systems often use buffers. Buffers temporarily store audio data and allow for consistent playback, but they introduce a delay. Larger buffer sizes result in greater latency but provide better stability.

4. Transmission Latency:

- In networked audio systems or when streaming audio over the internet, there can be latency introduced during transmission. Network congestion, data compression, and other factors can contribute to increased latency in these scenarios.

5. Digital-to-Analog Conversion (DAC) Latency:

- Similar to ADC latency, when converting digital audio signals back to analog format for playback, there is a slight delay introduced by the DAC process. This latency is usually very low and may not be noticeable in most cases.
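As a rough illustration of how these stages add up, the sketch below sums one latency figure per stage into a total for the whole chain. The class name, field names, and all of the millisecond values are hypothetical placeholders chosen for the example, not measurements of any real system.

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    """One latency figure per stage of the audio chain, in milliseconds."""
    adc_ms: float
    dsp_ms: float
    buffer_ms: float
    transmission_ms: float
    dac_ms: float

    def total_ms(self) -> float:
        # The stages run in series, so their delays simply add.
        return (self.adc_ms + self.dsp_ms + self.buffer_ms
                + self.transmission_ms + self.dac_ms)


# Hypothetical values for a local (non-networked) recording setup.
budget = LatencyBudget(adc_ms=0.5, dsp_ms=2.0, buffer_ms=5.0,
                       transmission_ms=0.0, dac_ms=0.5)
print(f"Total latency: {budget.total_ms():.1f} ms")
```

In this made-up budget the buffer is the largest single contributor, which is why buffer size is usually the first setting to look at.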

Audio latency becomes more noticeable and problematic in real-time audio applications, such as live performances, recording, and interactive applications like gaming or virtual instruments. High latency can result in a perceptible delay between performing an action (e.g., playing an instrument or speaking into a microphone) and hearing the sound, leading to a disruptive user experience.

To minimize audio latency, several strategies can be employed:

1. Use Low-Latency Hardware and Drivers:

- High-quality audio interfaces with low-latency drivers can reduce the overall latency in the audio chain.

2. Optimize Software Settings:

- Adjusting buffer sizes in audio software and selecting lower latency modes or settings can help reduce latency.

3. Use Direct Monitoring:

- When recording audio, utilizing direct monitoring allows the performer to monitor their input signal directly through the audio interface, bypassing software processing and reducing latency.

4. Minimize Signal Processing:

- Reducing the number of effects, plugins, or other processing modules can help minimize latency introduced by DSP.

5. Optimize Network and Streaming Setup:

- In networked audio setups, optimizing network configurations and using protocols designed for low-latency audio transmission, such as Audio over Ethernet (AoE), can help minimize latency.

It's important to note that achieving extremely low latency may require a combination of optimized hardware, software, and system configurations. The specific latency values that are considered acceptable or noticeable vary depending on the application and user preferences.

Audio latency has been one of the biggest issues that I have experienced when recording virtual instruments in a DAW. The effect of latency can be compared to how physically far you are from a guitar amp. Sound travels through air at roughly one foot per millisecond, so each millisecond of latency is roughly equal to being one foot away from your guitar amp. A 10 ms delay is like playing 10 feet from your amp. Once you are farther than 10 feet from your amp, you might start to notice the delay between when you play your guitar and when you hear the sound coming out of your amp. For most guitar players, the delayed sound affects their timing and performance. Guitarists need the guitar amp 10 feet (10 ms) or closer so everything feels right.
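The one-foot-per-millisecond rule of thumb can be checked with a little arithmetic. This is a minimal sketch that assumes sound travels at about 343 m/s (dry air at roughly 20 °C); the function name is made up for the example.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumption: dry air at about 20 °C
FEET_PER_METER = 3.28084


def latency_to_feet(latency_ms: float) -> float:
    """Distance sound travels through air in the given number of milliseconds."""
    seconds = latency_ms / 1000.0
    return seconds * SPEED_OF_SOUND_M_PER_S * FEET_PER_METER


for ms in (1, 5, 10, 20):
    print(f"{ms:>2} ms is about {latency_to_feet(ms):.1f} ft from the amp")
```

Under that assumption, one millisecond works out to a little over one foot, so the rule of thumb slightly understates the distance but is close enough for judging feel.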

Latency can be reduced on a computer by selecting a lower buffer size or a higher sample rate. The problem is that these settings put more strain on your CPU, and you might hear pops and clicks in your recording, which can end up being worse than the effects of latency.
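To see why both settings matter, note that the delay added by a single buffer is its length in samples divided by the sample rate. The sketch below prints that figure for a few common settings. The function name is made up for the example, and the result covers one buffer only; real round-trip latency also includes converter, driver, and any extra buffering, so treat these as lower bounds.

```python
def buffer_latency_ms(buffer_frames: int, sample_rate_hz: int) -> float:
    """Time needed to fill (or play out) one buffer, in milliseconds."""
    return buffer_frames / sample_rate_hz * 1000.0


for sample_rate_hz in (44_100, 48_000, 96_000):
    for buffer_frames in (64, 128, 256, 512):
        ms = buffer_latency_ms(buffer_frames, sample_rate_hz)
        print(f"{buffer_frames:>4} samples @ {sample_rate_hz:>6} Hz: {ms:5.2f} ms")
```

Halving the buffer size or doubling the sample rate both halve the per-buffer delay, but they also halve the time the CPU has to finish processing each buffer, which is where the pops and clicks come from.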

So the line between low latency and audio glitches depends on your CPU power, audio buffer settings, sample rate, and, most importantly, driver performance.

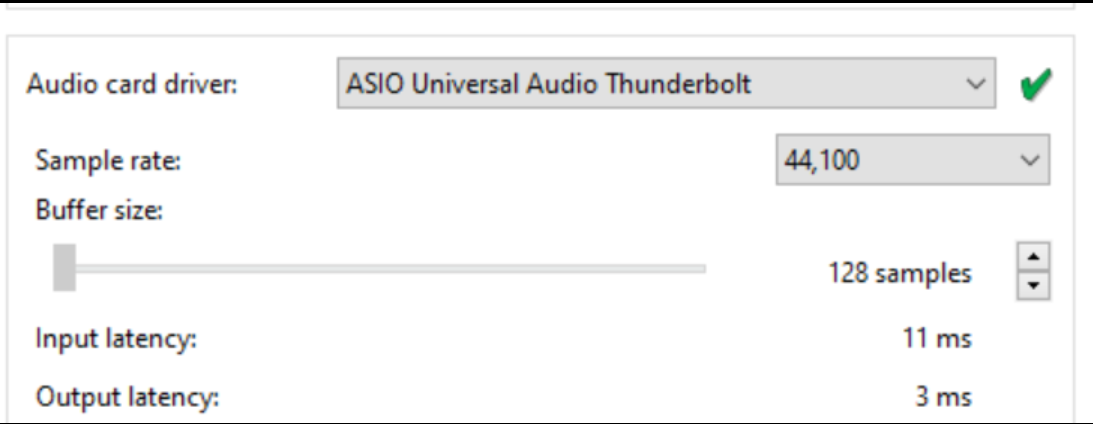

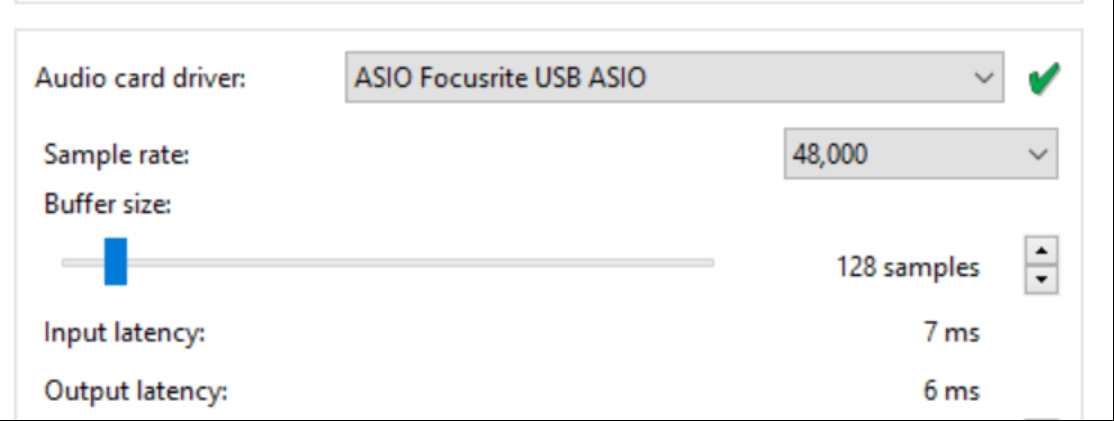

The best way to reduce latency is to use an external sound card that has stable drivers and to make sure your computer's single-thread performance is high. Two recommendations are the Focusrite Scarlett USB devices (3rd generation or higher, https://focusrite.com/en/scarlett) and the Universal Audio Apollo Thunderbolt 3 devices (https://www.uaudio.com/audio-interfaces.html). Both offer low latency and stable drivers. Just avoid 2-in-1 laptops when using a Thunderbolt 3 device.

You can check your computer's single-thread performance on the PassMark CPU Benchmark Single Thread Performance list.

Universal Audio Arrow settings

Focusrite Scarlett Solo (3rd generation) settings

Optimising The Latency Of Your PC Audio Interface

The Truth About Digital Audio Latency

Latency Issues with Interfaces

Audio latency changes in Windows 10

Optimising your PC for Audio on Windows 10